A team at MIT studied the impact of AI usage on the brain and reached this conclusion by the end of their study: "The use of LLM had a measurable impact on participants, and while the benefits were initially apparent, as we demonstrated over the course of 4 months, the LLM group's participants performed worse than their counterparts in the Brain-only group at all levels: neural, linguistic, scoring."¹

Although cognition is not a physical resource like water or power, the decline described above is still a concern. When people think less about the information they use and consume, a variety of problems can follow: more misinformation, more "disconnectedness," or overreliance on a sycophantic tool.

1. (Kosmyna et al., 2025)

What are some positives?

For one, AI makes us far more productive.² It can find information faster and, though perhaps not always legally, can access more of it than a single person could. With faster information gathering, people can write, create, or simply know more without having to dig for long stretches of time. AI also boosts productivity because it can simply write or create whatever it is asked to, skipping the work process and going straight to the end product. A drawing? Done! A short essay? Easy!

While not directly connected to the brain itself, education fits well here as a topic: AI could have great uses for both educators and students. It can help instructors clean up or create lessons, and it can help students by summarizing or recontextualizing content they did not understand and by giving them immediate feedback instead of making them wait for it.³ In the future, AI could even be used for grading with the educator's help.

2. (Liu et al., 2025)

3. (Office of Communications, 2024)

What are some negatives?

Light content warning for suicide

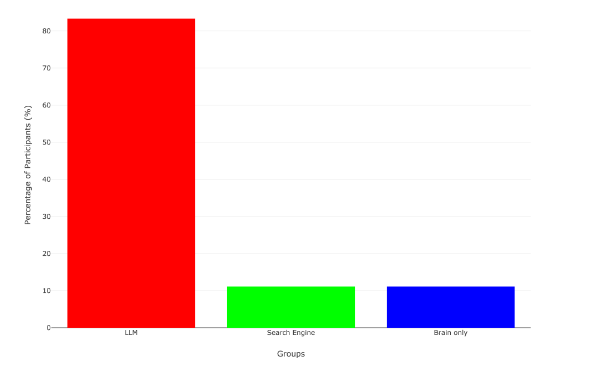

As mentioned in the blurb above, AI use weakens our ability to communicate what the AI generated for us: the rate at which we can explain topics or concepts is significantly lower for LLM users than for Google users or for people using only their brains. In using AI, we cannot communicate, create, or comprehend as well as when we do the work ourselves. For example, here is a graph of three groups, each with a different tool, demonstrating "the percentage of participants within each group who struggled to quote anything from their essays" in their first session.⁴

For source, see (Kosmyna et al., 2025) in References

While we may be more productive on speed alone, the trade-off is that we become more passive and disconnected. The end product is generalized, lacks voice or soul, and lacks the imperfections that make creations human. It can be mechanically amazing, but it will never carry the conviction and story that truly make a piece of media worth finishing.

In terms of education, AI currently has too many pitfalls to be integrated in a meaningful way. One major factor is privacy: for many LLM-based tools to work, they would need full access to what students have made. Not only does this raise potential ownership issues, but it would also likely expose students' personal information, a risk especially for institutions with underage students.⁵ There is also the ethical concern behind AI usage in academic settings: it is far too easy for students to generate entire projects, and educators have very few easy ways to stop it. This undermines the work of the students who actually complete their projects and does nothing to teach those who do not.

Separate from education or efficiency is a less-discussed negative of excessive AI usage. Because so many LLMs are sycophantic in nature, reliance and AI psychosis are becoming bigger and bigger problems: since these models “believe” and validate everything someone says, vulnerable individuals could spiral further and further into delusions or violent ideation towards themselves or others.⁶ This is a truly insidious and dangerous part of LLM usage. At the very minimum, four people have already died by suicide after becoming heavily reliant on AI bots that would echo back any of their thoughts, doing anything from confirming one person's delusions to telling another that it is alright to die. A page linking to each of the four people and their lawsuits against OpenAI is included in the citations, as that in and of itself should be enough to convince one of the danger this technology poses.⁷

4. (Kosmyna et al., 2025)

5. (Office of Communications, 2024)

6. (Carlbring & Andersson, 2025)

7. (Conrad, n.d.)